The 70/20/10 AI adoption model: Stack your chips towards people, not platforms

People are the secret agents of successful tech transformation

It’s the annual IT budget meeting. After all the hype and excitement in every town hall and monthly meeting this year about AI transformation, it’s time to get into the nitty-gritty and make it happen.

The big cheeses are gathering in the boardroom. They’ll decide where to make cuts in tech and other spending to fund your much-needed AI transformation programme.

Good luck. Let’s hope someone brought some decent biscuits.

The discussion may go something like this:

“How much are the software licenses?”

“The vendor bill for integration and consultancy?”

“How many people, projects and licenses do we need for a minimum viable test?”

From this horse trading springs forth your AI pilot budget.

But a year later, when you’re arguing the case for the next phase of funding, the conversations get tougher.

“Exactly what is the ROI so far? Where are the additional sales?”

“Do we need all these licenses?”

Then the CFO digs in.

“This pilot hasn’t met its KPIs. Close it down.”

All that initial buzz about the transformational power of AI fizzles out like an empty bottle of Moët. 🍾

So far, spending has gone heavily on tools and wrap-around consultancy. But it isn’t paying off for every organisation. According to MIT’s latest report, only about 5% of GenAI pilot programmes achieve revenue growth. The vast majority stall, delivering little to no measurable impact on profits.

Why the expectation vs. reality gap?

Most of the resources are going towards the tools, technical support and integration layer. But buying AI software licenses won’t deliver a holistic business change.

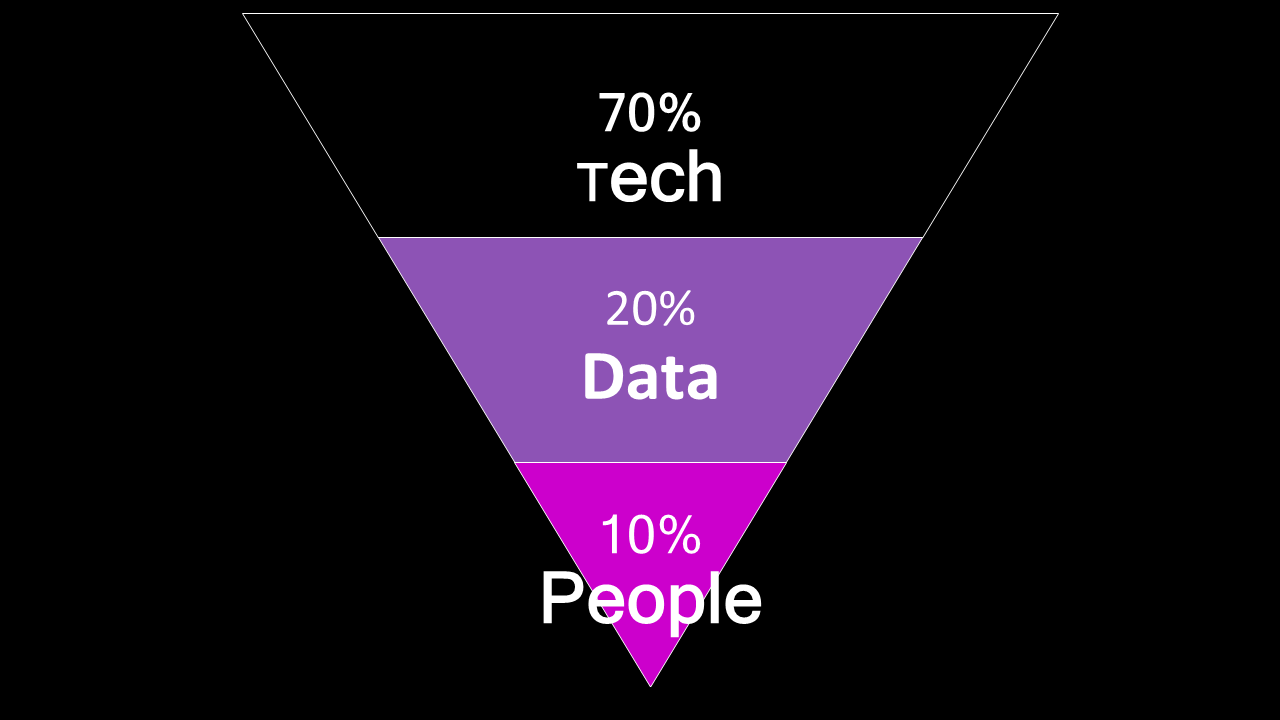

This IT- and solution-led model of tech investment puts most of the resources at the top of the pyramid, into technology. Less goes to data and processes, and even less to skills development for the people using it.

This AI pilot panic repeats across many industries because organisations get the resource allocation backwards. Successful AI transformation flips the investment ratio.

Why AI budgets are head over arse

The typical AI investment pattern – heavy technology spending with minimal people development – contradicts everything we know about how people learn and adopt new capabilities.

Technology alone doesn’t create change; it’s the people using technology that will.

And for AI, adding the right processes and data to the mix.

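The 70:20:10 learning model, which grew out of research by Morgan McCall, Michael Lombardo and Robert Eichinger at the Center for Creative Leadership and was later popularised by Charles Jennings, identifies that: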

70% of learning comes from experience, experiment and reflection, particularly with challenging tasks involving problem-solving

20% comes from working with others in collaboration, with feedback and action learning

10% of learning comes from formal methods like accredited courses, workshops and eLearning

The original research, which surveyed around 200 executives about how they developed leadership capabilities, showed that learning happens primarily through doing and collaboration.

Yet AI budgets typically invert this formula, spending around 70% on technology, 20% on data, and 10% on people development.

If you want your project to join the more than 80% of AI projects that fail, or the 30% of GenAI projects abandoned after proof of concept, stick with this formula.

If you’d like your AI pilots to have better odds of success, then flip the model.

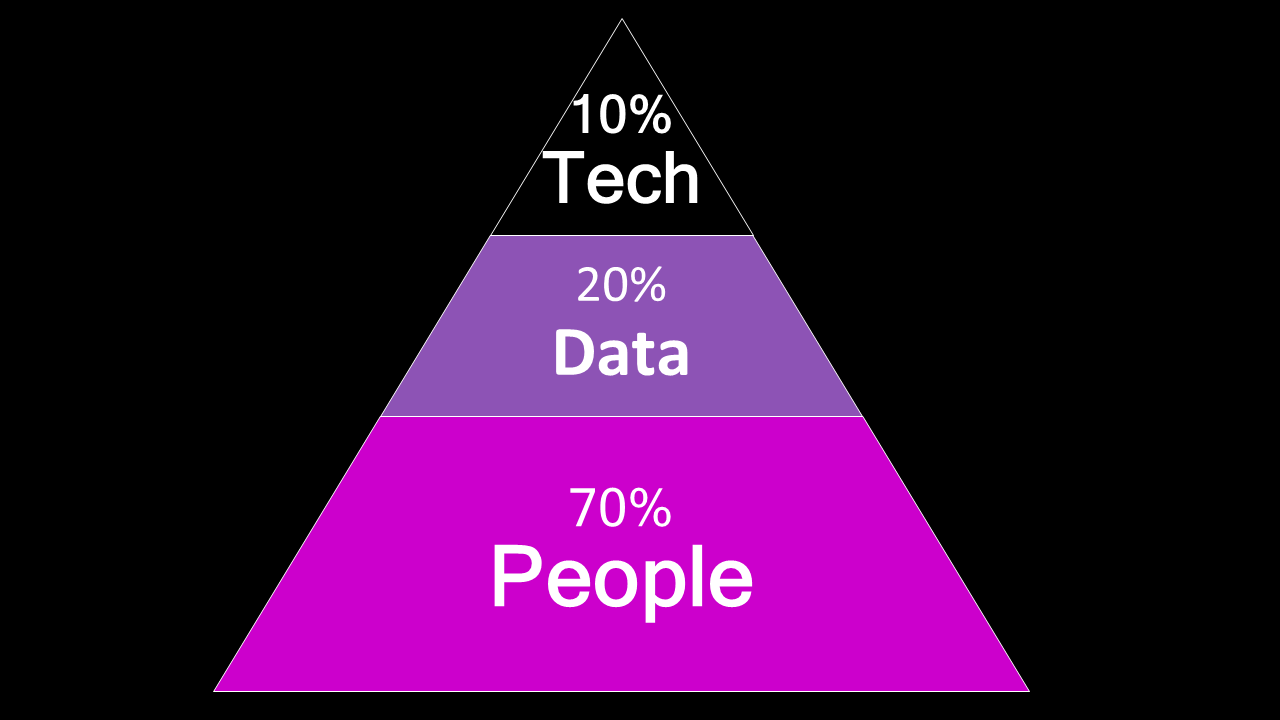

Instead, spend:

10% of the budget and effort on setting up your tech systems

20% on managing and optimising data and processes

70% on upskilling your people and giving them time to fine-tune systems
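To make the flip concrete, here is a minimal sketch of the arithmetic, comparing the typical tech-heavy split with the flipped 10/20/70 split. The £500,000 budget and the category names are hypothetical, chosen only for illustration; the percentages are the ones described above.

```python
from typing import Dict

# Typical tech-heavy allocation described above (hypothetical category names).
TYPICAL = {"technology": 0.70, "data_and_processes": 0.20, "people": 0.10}

# Flipped 10/20/70 allocation recommended above.
FLIPPED = {"technology": 0.10, "data_and_processes": 0.20, "people": 0.70}


def allocate(total: float, shares: Dict[str, float]) -> Dict[str, float]:
    """Split `total` across categories according to `shares` (which must sum to 1)."""
    # Small tolerance because decimal shares like 0.7 + 0.2 + 0.1 aren't exact floats.
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return {name: round(total * share, 2) for name, share in shares.items()}


if __name__ == "__main__":
    budget = 500_000  # hypothetical budget, not a figure from this article
    for label, shares in (("Typical", TYPICAL), ("Flipped", FLIPPED)):
        print(label)
        for name, amount in allocate(budget, shares).items():
            print(f"  {name:<20} £{amount:>12,.2f}")
```

The total stays the same in both cases. Only the weighting moves, so on this hypothetical budget the people line grows from £50,000 to £350,000 while the technology line shrinks by the same amount.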

Organisations that flip the model can expect better outcomes. Tools get used. Employees develop AI fluency – that’s not just knowing the bare bones of how tools work but how to use them as an extension of expert capabilities.

It’s not just theory: the big consultancies, including BCG, are deploying a similar model with their clients (though sadly, there’s a lack of published empirical data on it).

Introducing the 10/20/70 framework

The 10/20/70 model for AI transformation adapts an established learning framework to technology adoption. As Jennings notes, “the numbers aren’t a rigid formula” but a reminder that change requires proportional investment in people alongside technology.

Here’s more on how it breaks down:

10% technology systems

The smallest allocation goes to what typically receives the most attention: the technology itself. You’ll need to shell out, of course, to buy and integrate AI tools. That’s not just licensing the latest LLMs for desktop applications. It could mean custom-built automation between legacy systems, building your ‘organisational brain’ with RAG (Retrieval-Augmented Generation) systems, or licensing a small language model.

Your tech spend includes software licenses and integration work with other systems, like investing in APIs, data connections, security configurations and user interface customisations that reduce friction.

Don’t believe the demos: nothing works ‘out of the box’. Before asking “What can the tool do?”, start with “What outcomes are we hoping to achieve?”

We’re drawn to signing deals for shiny new tech like a moth to the procurement flame. Tech integration often goes awry, not because of the tech itself but because of how it’s deployed and integrated.

Choose integration with existing workflows over standalone applications that force users to change how they work. Changing how people work may come later, as you build efficiency. But new systems should first help people work better the way they do things now.

Smart organisations often discover that simpler applications with good development support and user training can outperform complex, feature-rich platforms that overwhelm users.

20% data quality and readiness

Data quality gets a small, but not insubstantial, slice of the pie because it’s the foundation for effective performance. Poor data quality reduces the accuracy of tools and erodes users’ trust.

57% of organisations estimate their data is not AI-ready, according to Gartner, with only 37% improving data quality in 2024. This data gap explains why many AI pilots don’t work as planned.

Without fine-tuned, well-structured data, layering on automation or generative AI will at best put a party hat on top of a turd.🥳 Improvements to data infrastructure, governance and quality give AI programmes the foundation for reliable performance.

Data quality initiatives clean existing data and standardise formats, eliminating inconsistencies that confuse automation systems. Data governance establishes policies for collection, storage and usage that comply with privacy regulations, without creating security vulnerabilities.

Effective data programmes also include bias auditing and correction processes that address inequities in data collection and representation. This demonstrates your organisation’s commitment to fair and accurate AI decision-making, a necessity for complying with GDPR and the EU AI Act.

Organisations that invest in data quality do better overall: systems perform better, and business intelligence and decision-making improve too.

70% people development

The largest resource goes to developing human capabilities and confidence in using the tools. This isn’t traditional change management; it’s positioning people as the secret agents of successful transformation.

To build AI fluency, invest in ongoing learning programmes. Test AI models for rigour and bias. Engage your teams as active participants in transformation rather than passive recipients of new technologies.

People development builds AI fluency rather than basic awareness. Teams learn how and when to use AI tools, how to interpret outputs critically and integrate them into workflows.

Jennings’ research shows that 90% of employees find collaboration essential, yet only 37% value formal learning. This suggests that peer-to-peer learning and practical applications strengthen skills more than classroom-style training.

The 70% people allocation includes the change management needed to sustain transformation over time. This includes communication programmes and feedback systems that capture user experiences and improvements based on real-world usage.

Building AI fluency beyond basic training

AI fluency mirrors how expertise is shaped in any complex domain. Awareness-level training introduces basic concepts and terminology, but fluency requires hands-on experience, peer collaboration and reflective practice over time.

AI fluency programmes create multiple learning pathways adapted to different roles and knowledge levels. Customer service reps need different capabilities than data analysts or marketing managers. Rather than generic “AI for everyone” training, provide role-specific development that connects directly to daily workflows.

AI fluency includes both technical skills and critical thinking to help people evaluate AI outputs and make informed decisions about when and how to integrate AI. This thinking separates those who are AI-enabled from those who are AI-dependent; “brain rot” from leaning heavily on AI chatbots to make decisions is real.

Investing in people can seem fluffy and intangible compared to spending on licenses, servers or algorithms. But there are tangible benefits to the individual and organisation. Research from The Adaptavist Group shows that those getting 20+ hours of AI training (closer to AI fluency) report better job outcomes, satisfaction and ability to explain AI’s returns and benefits.

Measure AI fluency through competence rather than course completion rates. Track whether people can solve pressing business problems, not whether they can pass a theoretical test. This hands-on approach ensures that development resources add capability rather than creating a pile of certifications.

Involve your customers as collaborators

Customer engagement is often overlooked in technology planning. The most successful transformations treat customers as co-creators rather than passive recipients. They explain how AI will improve service quality and help customers feel heard and valued in the tech transformation process. This collaborative approach builds trust and improves implementation and uptake.

Step 1 is explainability. Customer co-creation begins with transparency about how AI benefits customers: explain how and why it’s used for tasks like personalising recommendations, improving response times or enhancing service quality.

More advanced organisations co-create. They create customer advisory panels to give feedback on proposed applications and messaging. Co-creation addresses the trust gap that undermines many tech initiatives. When customers understand how the tech change benefits them and feel involved in the decision, scepticism starts to dissolve.

Get started with AI as a collaborator

Organisations that implement the 10/20/70 model report that they can double their wins. When all your competitors have access to the same LLMs and automation tech, yet so many are flailing, your investment in people, testing and accurate results is what will help you succeed.

Atlassian’s recent report painted the bleakest picture of AI adoption so far: 96% of respondents hadn’t seen any tangible results. But let’s focus instead on the 4% that are succeeding. They give people autonomy and treat AI as an integrated team member. Investing more in people and processes allows that collaboration to take shape.

As your teams become more AI fluent, they can identify new ways of doing things. Could no-code development tools help a customer service rep whip up a prototype in a day? Imagine being able to create 10 versions of a campaign or user interface and put them out to test. The winning idea may not be the one you would usually have chosen first.

The 10/20/70 model doubles down on what learning research has known for decades: sustainable change happens when people are supported by the right technology and processes.

When you go into your AI budget meeting to pitch, be ready to explain how investing in people will get you ahead of the pack. Those who get this allocation right will move faster and be ready for the next wave of tech.

Just remember to bring some decent biscuits.🍪

How is your business investing resources between people and AI tools? This article is free to read. Do share your comments and experiences.