To get AI adoption right, first go slow to go fast

Forget “AI-first” bluster: AI transformation needs patience and planning

Two companies with very different approaches announce their AI programmes on the same day.

The first firm, let’s call them Bluster, Inc., unveils its “AI-first” transformation with great fanfare and a press statement promising up to 50% productivity gains within 100 days.

The second firm, let’s call them Persist Enterprises, takes a different tack. They start the work, quietly. The CEO shares a post on the intranet, stating that they have intentionally decided to WAIT. That’s not to say they’re sitting on the sidelines; it’s intentional. They’re:

Working on AI Transformation (WAIT)

By mapping user needs and testing small-scale applications first, they take steady but continual steps forward towards their goal.

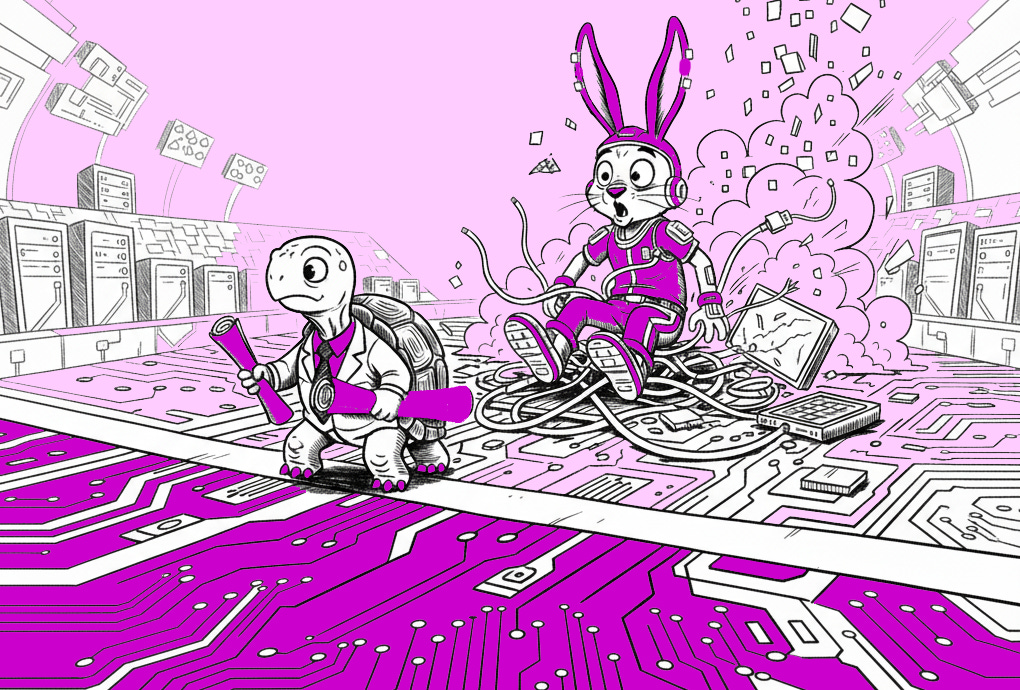

It’s the classic race between the tortoise and the hare, as originally told in Aesop’s fable way back in Ancient Greek times. More than two and a half millennia later, it’s being played out in boardrooms and IT departments around the globe.

The hare (Bluster, Inc.) sprints ahead with flashy announcements and aggressive targets. The tortoise (Persist Enterprises) moves methodically, building foundations and testing carefully, sharing results once they’re known.

One year on, Bluster, Inc. runs out of internal investment and shareholder confidence, and quietly shelves most of its AI projects after costly failures and user resistance.

Meanwhile, Persist Enterprises has successfully integrated AI technologies across multiple departments. Employees see the benefits and queue up to take part in pilot programmes to test new product licences and agentic workflows.

What was different:

Bluster, Inc. moved fast and broke things. Persist Enterprises used the WAIT framework. They moved slowly and fixed things. The tortoise won.

Why “move fast” fails in AI transformation

Silicon Valley’s “move fast and break things” mantra has crept into AI adoption, creating a culture where speed trumps substance. The hype radiates from the big AI players and their need to vastly overstate the benefits of their AI models to attract consumer and corporate investment. Those data centres the size of Manhattan they’re building aren’t funded on good vibes alone (or even good vibe coding).

Once the board has drunk the Kool-Aid, the wee dribbles down to individual teams, under pressure to prove the gains that have been greatly overstated. The results are predictably disastrous.

80% of AI projects fail. Meanwhile, 30% of GenAI projects in 2025 will be abandoned after proof of concept, according to Gartner, citing poor data quality, inadequate risk controls, escalating costs and unclear business value.

Between 70% and 85% of GenAI deployment efforts fail to reach their desired ROI. And on a practical level, recent stats show that the bigger you are, the harder it can be to get results, with only 5% of enterprises fully integrating AI tools into workflows.

On a positive note, individuals are building micro efficiencies, like a ‘digital twin’ that can help offer perspectives and feedback on routine tasks. But that individual benefit isn’t yet translating into pounds, shillings or even pence for the organisation’s bottom line.

The pattern is well trod: organisations rush to deploy AI without addressing fundamental AI readiness issues. Failure is yawningly predictable. Poor data quality creates a shaky foundation, leading to unexpected and often plain wrong outputs.

Many staff overseeing automated systems, or expected to use GenAI tools for efficiency, lack sufficient training. 67% of marketers say lack of training is their primary barrier to AI adoption.

That’s concerning, as the EU AI Act now mandates AI literacy. Workers managing AI systems must be able to understand how systems work and articulate the risks to meet responsible AI principles.

Leaders who shout the loudest about going “AI-first” or force teams towards an “adapt or perish” hardline strategy play a risky roulette. If the message lands on black, teams get on board the train and look at different ways of reframing problems with new tools and workflows. If (and it’s a big if) investment in AI automation allows for wholesale reform, and there’s sufficient resourcing for upskilling and training, then the bet *could* pay off. Those who achieve AI fluency typically have 20+ hours of expert training in using and designing automation systems.

But there are baked-in risks in moving fast. A lack of governance creates legal and compliance risks. Poorly delivered change management generates resistance. Each problem builds on the previous one, creating expensive failures that can turn everyone off or have them reaching for the snooze button on your tech transformation programme.

The cost of fixing wonky AI after deployment could exceed the investment needed for proper AI-readiness. When systems make biased hiring decisions, generate off-message customer communications or breach privacy regulations, the remediation costs may include legal fees, regulatory fines and system redevelopment. That’s before considering the hidden costs of reputation damage and rebuilding customer trust.

So, with all this at risk from moving too fast, is it time to take a breath and go slow to move forward?

WAIT method for AI transformation

WAIT isn’t about being on hiatus or avoidance. It recognises that sustainable tech transformation starts with proper planning. And that involves preparing people as much as optimising technology.

It means listening to perspectives from your teams about the potential hopes and fears during the AI-readiness research phase. If some teams are keen to get going, they could become your canaries in the coalmine. Ask them to share their learning in practice and play a key role in your AI champions programme.

I love a good acronym, so WAIT is a two-for-one (or BOGOFF, if you will).

Working on AI Transformation helps you make progress in four stages:

1. Ways of working

Establish the rules of engagement with the programme, with an open door for ideas and critiques.

2. Assess

Review results and build in regular reviews and feedback. Make early judgment calls on what is and isn’t working to correct course.

3. Iterate

Change the approach, technology or tone for how you engage as needed.

4. Transform

Focus all eyes on the end goal: transforming your ways of working for good. (This one is a bit of a cheat, as transforming is the overarching outcome).

The WAIT framework acknowledges what research shows: only 30% of change initiatives succeed. Change programmes often fail because organisations underestimate the human factors involved in technology adoption. 72% of transformation failures can be attributed to inadequate management support (33%) and employee resistance (39%).

WAIT anticipates these potential failures by prioritising people and leadership alongside technology from the start.

How to implement WAIT

1. Ways of working

The idea that you can install a new technology that will let you wave a wand and automate all the messy processes where other tech innovations failed is pure corporate fantasy.

Sam Altman’s ill-placed comments that AI could automate 95% of marketing tasks done by agencies (way back in March 2025, during peak AI hype season) are pure naivety.

Anyone involved in a complex process, particularly at the enterprise level, knows there’s usually a messy box of different systems. It’s like the drawer where every cable you’ve ever owned has inexplicably wound itself into a spaghetti of versions, processes and data categorisation. This all needs careful unpacking and matching before true automation can happen.

I worked on a project for an organisation with multiple website systems. Each had a different taxonomy for tagging and labelling web content. So the web page system might classify an article about a Christmas fair as ‘Christmas’, ‘Event’, ‘Seasonal’ and ‘Markets’, while the event system might call it ‘Xmas’, ‘December’ and ‘Family events’. And that’s something as innocuous as a hidden data field in the system back end. All that data would need harmonising before the systems could feed into one another or be useful for a third-party service or automation.
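To make that harmonising step a little more concrete, here’s a minimal Python sketch. It assumes a hand-built lookup table: the `CANONICAL_TAGS` mapping and the shared tag names are made up for illustration and aren’t taken from the real project. The idea is simply to fold each system’s labels into one shared vocabulary and flag anything that doesn’t fit.

```python
# Hypothetical lookup: each system's label (lower-cased) -> one shared tag.
# Which labels count as "the same" is an editorial decision, assumed here.
CANONICAL_TAGS = {
    "christmas": "christmas",
    "xmas": "christmas",
    "seasonal": "seasonal",
    "december": "seasonal",
    "event": "event",
    "family events": "event",
    "markets": "markets",
}


def harmonise(tags):
    """Return the shared tags for one record, plus any labels we couldn't map."""
    mapped, unmapped = set(), set()
    for tag in tags:
        key = tag.strip().lower()
        if key in CANONICAL_TAGS:
            mapped.add(CANONICAL_TAGS[key])
        else:
            unmapped.add(tag)
    return mapped, unmapped


web_page_tags = ["Christmas", "Event", "Seasonal", "Markets"]
event_system_tags = ["Xmas", "December", "Family events"]

web_mapped, web_unmapped = harmonise(web_page_tags)
event_mapped, event_unmapped = harmonise(event_system_tags)

# Tags both systems now agree on for the same Christmas fair.
shared = web_mapped & event_mapped
print(sorted(shared))
```

The unmapped set is the useful bit in practice: it’s the list of labels a human still needs to make a call on before the systems can talk to each other.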

Establishing ways of working is two-fold:

1. Set out the guardrails for your AI transformation project:

Who will be involved?

What are our goals?

What are our rules of engagement?

2. What systems and processes can you most easily unpick from the tangled cable box?

It’s a reverse engineering exercise: by documenting your processes, you can work towards a standardised delivery, which means you can start to automate.

Process mapping is thorny and complex. Fortunately, GenAI tools are really great for working this out. You can instruct your LLM to interview you about how the process works now, and ask others in your function to do the same. From this, a wider review using your LLM can see the common challenges and bottlenecks.

Warning: Things can appear to be the same, but actually show up quite differently. If the widget should come out of the machine in a uniform shape, colour and size – or the automated sales email should always pull the same data based on the customer’s last purchase – then game on. But often things, and demanding customers, show up in incrementally different ways each time. This makes it hard to systemise.

Fast food is a commodity retail business that many try to automate, as most workers complete the same actions each time to fulfil orders. But in reality, fast-food workers have to make hundreds of micro-decisions based on how customers show up. There are late-night drunken orders, or a person who speaks a different language and struggles to understand how to order. Or, in the case of Taco Bell, a prankster customer may try to overload the system by ordering 18,000 cups of water.

Establish the most consistent things you do. Or if there aren’t any, what mid- to high-value activities do you do that could benefit (or wouldn’t unduly suffer) from being done a little faster, or more uniformly?

You should have completed an audit to assess data quality in the AI-readiness phase. In the WAIT phase, it’s time to put it into test mode with pilots. Your technical architects and security experts need to identify risks and put in place secure solutions.

You also need to strengthen your approach towards responsible AI best practices. A critical activity to align on during this phase is AI governance – establishing guardrails for data governance, regulatory risks and ethical approaches to algorithmic bias.

2. Assess and test

Unlike traditional technology pilots, which focus mainly on technical functionality, WAIT pilots prioritise learning from commercial results and participants’ experiences. To do this, you need to plan how you will measure success.

Measuring the commercial results in an AI pilot is usually the top goal, transforming AI from a tech experiment into a business strategy. Traditional technology pilots measure improved system functionality. This means if you meet the project objective, like rolling out 3,000 AI software licences to remote desktops, then the project is recorded as a success.

In the end, measuring commercial impact is the only metric that really counts when it comes to accruing new resources, which you’ll need as AI projects become more complex and expensive.

Going from technical delivery to commercial success means planning for people and process uptake.

So the project goal could be to help the sales and marketing team with personalising customer service. Here’s how it could break down.

Step 1:

Evaluate suitable service providers or automation solutions on the market.

Step 2:

Concurrently, map the existing process to codify the steps, skills and resources.

Step 3:

With your selected vendor, plan how the technology can support or streamline the current method.

Step 4:

Establish measurable goals for what the improved process will save or allow you to do differently or better.

A note on the economics:

Your competitors have access to exactly the same tools and are most likely thinking the same thing right now. A more ambitious target is to show how the new automated method can help you grow. Think different. Not just the same but a bit tidier or slightly faster.

Now you can begin the test phase.

There’ll be some hypotheses and assumptions (and some well-intentioned optimism of best-case results from your vendor). Plus, hopefully, an agreed-upon plan with benchmarks to run upwards from.

You can measure the direct impacts – like error reductions, quicker conversions and end revenue. Then a somewhat stickier metric: impact on employee productivity. Research shows that most GenAI tools are helping employees with small wins and admin efficiency. But it’s not netting out to productivity gains wholesale for the organisation.

This may not be your objective here, but if teams are happier and feel more supported and productive, they will stick around longer and do more, with the sharper members lending their talents to finding you new opportunities.

Finding out how they feel about it could support secondary benefits. In your employee engagement survey, can you include a question about the tech transformation programme?

GenAI tools can create an innovation advantage for new ventures. Like vibe coding a prototype of a new service, then putting it out for testing for a fraction of the time and cost of a full-scale new service plan. 10 quick experiments tested in the wild with friendly advocate customers could turn into a new service opportunity.

Automated data reporting could help you make decisions more quickly or serve customers with new products. If you’re in a tech or innovation-focused sector, how stakeholders and customers perceive your AI innovation is also critical to staying competitive.

Then there are the ‘backroom’ benefits of legal and compliance. Here, AI may create more risks than gains. That needs factoring into your responsible AI planning.

All this measuring can seem daunting. It could evoke memories of a ‘time and motion’ study. I had a friend who did this consultancy in the 1980s. He would literally “shadow” by hovering behind the subject’s desk to see what they did, spying to report on the supposed ‘wastage’. Invariably, there was none. It was more of an arse-covering exercise.

However AI-enthused your org is, these technologies and associated consultancies are pricey. So, you need to have your eyes on the prize: measurable results in improved process, leading to optimised delivery or enhanced revenue, and importantly, measuring employee feedback linked to job satisfaction and skills. That’s belt and braces arse-covering.

How and what you measure will depend on the schema of your project and the ways of working inside your organisation.

At a basic level, you can ask team members who took part in the pilot what they can do more of now that they couldn’t before. Or what new opportunities this way of working could open up. The answers may be unexpected or illuminating.

Assessing the participant experience

It’s the people, not the technology, that determine if tech transformation will succeed or fail. This is fuzzier as it’s typically a more subjective assessment about whether your teams feel they’re getting any benefits from the new approach.

There are many reasons why projects can go awry. Many link back to a lack of foresight and planning.

International conflict produced an interesting theory on how we frame this. In 2002, during the hunt for alleged “weapons of mass destruction” (WMDs) in Iraq, US Secretary of Defense Donald Rumsfeld talked about categorising the things you do and don’t know.

“There are known knowns; there are things we know we know.

We also know there are known unknowns; that is to say we know there are some things we do not know.

But there are also unknown unknowns — the ones we don’t know we don’t know.”

If that sounds as cryptic a riddle as the WMDs they failed to find, it is. Ambiguity is at the heart of any business transformation. The more complex your organisation is, the more the fog of uncertainty hangs heavy.

We need to define what this looks like for our change programme. In the context of AI adoption, a known known might be that your customer service team currently handles 1,000 support tickets daily with an average resolution time of 55 minutes.

A known unknown would be how much an AI chatbot could reduce ticket volume by dealing with more issues before a support ticket is lodged. We can make an estimate and measure against it.
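Here’s a rough Python sketch of what “make an estimate and measure against it” could look like. The 1,000 tickets a day comes from the example above; the 20% forecast and the pilot ticket counts are invented purely for illustration.

```python
from statistics import mean

BASELINE_TICKETS_PER_DAY = 1_000   # the known known from the example
ESTIMATED_REDUCTION = 0.20         # assumed forecast: chatbot deflects 20%

# Made-up daily ticket counts observed during a one-week pilot.
pilot_daily_tickets = [910, 880, 905, 860, 845]


def reduction_vs_baseline(daily_counts, baseline):
    """Share of the baseline volume that no longer arrives as tickets."""
    return (baseline - mean(daily_counts)) / baseline


actual = reduction_vs_baseline(pilot_daily_tickets, BASELINE_TICKETS_PER_DAY)
print(f"Estimated: {ESTIMATED_REDUCTION:.0%}, measured: {actual:.0%}")
print("On track" if actual >= ESTIMATED_REDUCTION else "Below estimate: revisit assumptions")
```

A drop in ticket volume can have other causes (a quiet week, a product fix), so treat a comparison like this as a prompt for questions rather than proof.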

An unknown unknown might be discovering that AI-generated responses, while technically accurate, create a subtle shift in customer perception of the trustworthiness of your brand that affects loyalty in ways you never anticipated measuring. We can also flag this as a risk and make contingency plans for working through acceptance tests within the responsible AI planning.

Let’s see another example for measuring people participation.

A known known is that people need to understand the programme aims, why change is needed and what their manager’s expectations are for their participation. This can be planned for with good change management and internal communications. AI projects are, in this aspect, exactly the same as any other business transformation.

Another known known is that people must have sufficient training and education to progress towards AI fluency. Without access to time and experts to use new tools or follow a new process, expect your project to fail.

Known unknowns may stem from findings from the AI readiness stage, and some could be anticipated. Take cultural differences, which mean uptake varies across backgrounds and nationalities. High-context cultures, like Japan and some Arab countries, rely on implicit communication, relationships and situational understanding: areas where AI cannot add much value to the decision-making or workflow and may even damage it.

Then there are the unknown unknowns. This is why WAIT pilots are so valuable. They’re designed to take the time to consider the range of potential issues to convert unknown unknowns into known unknowns. Then eventually the fog clears and they become known knowns, reducing the risk of scaling your AI initiatives without a complete understanding of their limitations.

What to measure and how

To turn the subjectivity or ‘vibes’ of what’s working into more tangible data points, you’ll need to get out your measuring stick. As well as the usual employee survey, consider conducting a focus group combined with one-to-one interviews with users across the scale: enthusiastic adopters, regular users and reluctant participants. You want to shake out the most honest responses to learn what should be kept and what must change before a wider rollout.

Get more accurate data on tasks and tool time use from system logs. People tend to either over- or underestimate answers to questions like ‘how long did you spend on the task before?’ Training and platform hours should be systematically measured.
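As a minimal sketch of the log-based approach, the Python below works out how long tasks actually took and compares that with someone’s guess. The log format (`LOG_ROWS`), the timestamps and the self-reported figure are all hypothetical; your own systems will export something different.

```python
from datetime import datetime
from statistics import median

# Hypothetical log export: (task_id, started_at, finished_at) in ISO 8601.
LOG_ROWS = [
    ("T-1", "2025-03-03T09:00:00", "2025-03-03T09:42:00"),
    ("T-2", "2025-03-03T10:15:00", "2025-03-03T11:05:00"),
    ("T-3", "2025-03-04T14:00:00", "2025-03-04T14:35:00"),
]


def minutes_per_task(rows):
    """Duration of each logged task in minutes."""
    durations = []
    for _task_id, started, finished in rows:
        delta = datetime.fromisoformat(finished) - datetime.fromisoformat(started)
        durations.append(delta.total_seconds() / 60)
    return durations


logged_median = median(minutes_per_task(LOG_ROWS))
self_reported = 30  # what the person guessed in the survey, in minutes (made up)

print(f"Logged median: {logged_median:.0f} min, self-reported: {self_reported} min")
print(f"Gap: {logged_median - self_reported:+.0f} min")
```

The median is used rather than the average so that one unusually long task doesn’t skew the picture.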

Questions you can use in your participant survey before the AI pilot:

How do you feel about AI tools now? (negative-positive scale)

How confident do you now feel about your ability to work effectively with our tools?

Ask them again at the end of the pilot to determine the before and after change.
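If you’d like to put a number on that before-and-after change, a small Python sketch could look like this. It assumes answers on a 1 to 5 scale and an anonymised participant ID so the two surveys can be paired; the IDs and scores are made up.

```python
from statistics import mean

# Hypothetical responses on a 1 (negative) to 5 (positive) scale,
# keyed by an anonymised participant ID so answers can be paired.
before = {"p01": 2, "p02": 3, "p03": 4, "p04": 1}
after = {"p01": 4, "p02": 3, "p03": 5, "p04": 2, "p05": 5}  # p05 joined late


def paired_change(before, after):
    """Per-person change, using only people who answered both surveys."""
    both = before.keys() & after.keys()
    return {pid: after[pid] - before[pid] for pid in sorted(both)}


changes = paired_change(before, after)
average_shift = mean(changes.values())
improved = sum(1 for delta in changes.values() if delta > 0)

print(changes)
print(f"Average shift: {average_shift:+.2f} points; {improved} of {len(changes)} improved")
```

Pairing by person matters: comparing the overall averages would let late joiners like the hypothetical p05 flatter the result.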

After the pilot stage, ask:

To what extent did the AI tools enhance or complicate your job responsibilities?

Do you feel you now have the knowledge and skills to use the tools confidently?

After the pilot, what are you and your team doing differently now than before?

What concerns do you still have about using AI in your work?

By buttoning down the assessment stage, you will have a clearer idea of what needs to be done to decide whether to fix and roll out, or ditch and roll off, the pilot.

3. Iterate and improve

Spend more than a few minutes in conversation with a Greek, and you’ll soon hear the expression “σιγά σιγά” (“siga, siga”) or “slowly, slowly.”

In one of the hottest countries in Europe, where culture has stood solid as the Acropolis for several millennia in the lands where Aesop wrote his tortoise and hare fable, doing things too fast can have unintended consequences. The expression invites the listener to self-reflect and have patience for resolving the situation at hand calmly.

The WAIT approach to tech transformation invites this thinking of thoroughness and patience as a virtue.

Now you’ve got the results from your pilot survey. The iterate phase is about making adjustments to the technical practice and considering how the human side – accelerating knowledge and winning hearts and minds – should evolve.

The headline in Fortune’s reporting of MIT’s study about enterprise AI adoption shouted loudly that 95% of GenAI pilots are failing. The headline gained traction because there are as many forces that want AI projects to fail as there are behind the noisy and mighty hype narrative that wants to see success. You’ll probably come across some of these negative Nellies within your organisation.

But look beyond the headline, and the situation is less dire. The definition of ‘success’ in the report was super narrow, with a tangible ROI within six months of the pilot. The 5% of projects in MIT’s study that did work made good gains by ensuring AI was successfully embedded in the team and collaboration practices.

Be more in control of your destiny. Set your own definition of success, then measure against it. More streamlined and part-automated processes using cutting-edge tools may raise your team’s project quality and ability to attract and retain talent.

As an inclusive AI leader, your challenge is to own the narrative around defining success, then relentlessly drive towards it. Cheerlead the efforts, even if not all of them work out (fun fact: it’s unlikely they all will).

If you have the confidence to push the edges of a definition of innovation, do so. Although AI innovation should be the focus for known business challenges, it’s an experimental technology, and no one knows all the answers yet.

Your fancy tech transformation consultant knows a bit more, but not the full picture of your complex organisation’s back history. Innovation mostly happens by tinkering and playing at the edges. Matt Ballantine from Equal Experts invites us to rethink enterprise AI approaches through connection and experimentation. Go play a little.

4. Transform and scale up

More tech projects fail than succeed. Transformational success or failure is largely based on human factors. And the factors which distinguish success from failure consistently relate to people management.

Not quite as apocalyptic as the MIT study, but close, research from IDC and Lenovo found that 88% of proof-of-concept (PoC) projects don’t make it to wider deployment. That’s only about 4 in 33 projects succeeding.

This indicates a high appetite for tech investment and perhaps a little bit of ‘shiny object’ meets ‘keeping up with the Joneses’ syndrome. Yet most are pulled downwards by low levels of organisational readiness in data, processes and tech infrastructure.

The secret sauce for success is classic change management. McKinsey research shows that successful transformations rely on holistic approaches that address organisational capabilities alongside technical changes. Those who invest in change management, communication and skills development are 1.5 times more likely to report transformation success.

The WAIT framework treats people development as a prerequisite, not an afterthought. Each phase includes specific activities designed to build confidence, capability and buy-in among the folks who ultimately determine whether AI systems create value or collect digital dust.

To paraphrase the villains in the cartoon Scooby-Doo:

“I would have gotten away with it, if it wasn’t for you pesky people.”

Even with the best programme managers, scrum masters and meticulous planning and budgeting, tech transformation projects fail if they focus all efforts on process and too little on people. The perfect formula for AI transformation is a 10 / 20 / 70 split:

10% effort on setting up your tech systems

20% on data and processes

70% on upskilling your people

More about this method in a future article.

AI projects fail when they treat people as pesky “roadblocks” to overcome rather than the principal cogs that need to be fine-tuned to make the machine hum. Map out the people bits in your planning and risk logs. Turn people from a consultation phase at the end of the process to a consideration at each stage.

Patience is a virtue for tech transformation

The WAIT framework is about moving thoughtfully but continuously. Get this planning stage right, and these positive outcomes could follow:

Higher adoption rates

Once a new technology is introduced, there’s an initial flurry of excitement with ‘newness’ that’s often followed by a steep usage decline. Preparation creates confidence with your system owners and users, which drives sustained engagement. You signal to your teams that you’re in it together, and for the long haul.

Better return on investment

The CDO Insights 2025 survey identifies data quality and readiness (43%), lack of technical maturity (43%), and shortage of skills and data literacy (35%) as the top obstacles to AI success. The AI-readiness planning and WAIT frameworks combined address all three for a project that may not blow the lid off ROI, but should at least make the programme way less likely to fail.

Risk mitigation

Systematic testing and responsible AI governance planning can help organisations avoid costly mistakes like biased decision-making, privacy violations or gaps in regulatory compliance.

Organisational confidence

A more prepared tech transformation means your projects are less likely to fail. This can give you the edge on competitors who are investing similar efforts and resources in projects that are less likely to succeed. Internal expertise and capabilities become stronger when everyone feels heard in the process. This puts the organisation in a good position to benefit from future technology innovation waves.

When NOT to WAIT

WAIT isn’t right for every situation. Some need fast action. AI tools for areas like cybersecurity and data privacy may need to be rolled out faster to avert rapidly evolving risks.

Knowing that some decisions need to run at speed, it’s useful to have a ‘fast track’ process, either by getting everyone to prioritise the usual risk assessment work or by shortcutting steps to get there faster. Governance isn’t optional, but if there’s a greater risk at play, there could be a ‘lite’ version of your usual process, as the risk of not acting may be greater than the risk of waiting to do it absolutely correctly.

In your mapping of potential tasks and use cases for AI pilots, you may identify some that are lower risk – like using GenAI tools for internal comms content and planning. These tools could be switched up at a later date, as most AI tools are currently utilities rather than a ‘moat’ technology.

Getting more teams started with a few tools and processes could be a smarter strategy than waiting until the end of the planning stage or hoping a miraculous all-purpose AI will be launched soon. Also consider that “shadow AI”, when people use their own (often free-tier) GenAI accounts for work, could potentially cause data privacy risks.

How to frame the WAIT strategy

How you talk about your AI planning stage is critical to getting buy-in.

For internal and external stakeholders, frame WAIT as “Working on AI Transformation”, never as “Waiting to implement AI.”

Emphasise that your work is active and measurable, and include live examples of the test briefs you’re starting with.

AI transformation needs to align with existing change management processes. Make sure your risk planning and reporting align with corporate standards. AI transformation shouldn’t be seen as exclusive or requiring a different approach.

Organisations that plough their own furrow with careful preparation can also build up their expertise, reducing dependence on expensive external consultants. You can demonstrate that AI transformation can be inclusive and sustainable, rather than disruptive and anxiety-provoking.

Beavering away behind the scenes on AI transformation while competitors rush straight to deployment isn’t moving slowly. It’s building the solid foundation of underpinning technologies and critical innovation thinking that puts you in a strong position for the future.

Is your organisation rushing headlong or working steadily forward? What challenges have you seen with “move fast” approaches? This post is free to read and share. I’d love to get your thoughts in the comments.